Two days ago, I pointed out how Node.js, an event-driven web framework,

will eat it hard if it's given any nontrivial amount of CPU work to do in its request handler. After I published that,

it seemed that the point of the article went sailing right past the Node.js camp, who proceeded to see how

fast they can make a Fibonacci number generator.

Two days ago, I pointed out how Node.js, an event-driven web framework,

will eat it hard if it's given any nontrivial amount of CPU work to do in its request handler. After I published that,

it seemed that the point of the article went sailing right past the Node.js camp, who proceeded to see how

fast they can make a Fibonacci number generator.

The Fibonacci function was arbitrary. It was inefficient on purpose. I needed a function that would use CPU time, and chose that because it's familiar and easy to implement. So, now I offer a more formal analysis of what CPU usage does to the throughput of an event-driven system as compared to a threaded system.

Since it's now clear that reading comprehension and critical thinking are not strong suits of the Node.js programmer, I would suggest that all Noders reading this article read it aloud, slowly and loudly, like an American tourist trying to find a train station in Tokyo. Furthermore, to assist the Node camp, I will highlight the important parts in large lettering, like this:

When the weather is threatening rain, bring an umbrella with you.

When the weather is threatening rain, bring an umbrella with you.

A Math Model of Throughput

Assume we've got a request handler that processes an HTTP request and sends back the result. Let's see how threads and event loops differ on processing these requests. Note that we're measuring throughput here, not latency. That's an article for another day.

This is an analysis of Queries Per Second (QPS) only.

This is an analysis of Queries Per Second (QPS) only.

Let's start with some definitions:

- Let C be the amount of CPU time used by the handler, in milliseconds.

- Let I be the amount of I/O time used by the handler, in milliseconds.

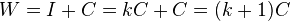

- Let W be the wall clock time it takes for a handler to execute. By definition, W = I + C

- Let N be the number of threads running in the threaded system.

- Let E be the throughput of the event driven system.

- Let T be the throughput of the threaded system.

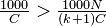

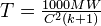

Given that the times are measured in milliseconds, we can define  and

and  .

.

Since the wall time W is expressable in terms of CPU time C and I/O time I,

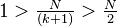

and considering that both C and I are positive, nonzero, it is helpful define  , with the factor k expressing the relationship between C and I.

, with the factor k expressing the relationship between C and I.

It follows then that  and

and  .

.

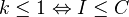

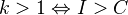

THEOREM 1. When a handler takes more CPU time than I/O time, an event-driven system has greater throughput than a threaded system if and only if the threaded system has exactly one thread.

PROOF (partial). (note: for brevity, I will only prove one direction. The other direction is an exercise left for the reader.)

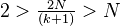

Suppose

Simplifying the inequality,

Given that  , we can bound the inner term

, we can bound the inner term

Further simplifying

Since N is integral and nonzero, it follows that  .

.

If you do more CPU than I/O, use threads.

If you do more CPU than I/O, use threads.

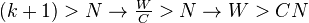

THEOREM 2.When the handler takes more I/O time than CPU time, an event-driven system has greater throughput than a threaded system if and only if  .

.

PROOF (partial).(note: again for brevity, I will prove one direction).

Given our previous construction,

and the alternate expression

it follows that  .

.

If you do more I/O than CPU, use more threads.

If you do more I/O than CPU, use more threads.

A Practical Example

Let's suppose you have a request handler that does 10 milliseconds of CPU work and 50 milliseconds of database I/O. Would you choose threads or events?

I this case, the theoretical maximum throughput of the event driven system is 1000/10 = 100 QPS, where as a threaded system with 50 threads has a theoretical maximum throughput of 50,000/60 = 833.33 QPS. Of course, in the threaded case, you need to worry about being bound by the CPU, but given the number of cores on modern hardware, threads seems like a winner here.

Multiple Event Workers

The Noders got really into this one: forking "workers" from your event loop to do the heavy CPU work, and having them call back to the event loop when they're done. One parent process coordinates work among many children? Where have I heard that before?

Anyhow, let's extend the model to that case. Just for funsies.

Since your asynchronous processes do not block on I/O, at full utilization, they will theoretically take 100% of the CPU. Therfore, the number of worker processes to spawn must be equal to the number of CPUs in the system to avoid oversubscribing the machine. Let's introduce a new variable, M, to represent the number of CPUs in the computer.

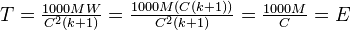

The throughput formula for the event driven system therefore becomes

Now, with threads, we also need to avoid oversubscribing the CPU. Considering that during a single handler execution, only C milliseconds of CPU are used, it follows that the number of threads that will achieve theoretical maximum utilization is  .

.

Our formula for the threaded system's throughput is therefore

...but look at this:

At full utilization, threads and events have the same theoretical throughput.

At full utilization, threads and events have the same theoretical throughput.

This makes intuitive sense, as if the CPUs are working as hard as they can, all else equal, they should yield the same performance regardless of the framework used.

Hold up, this does not prove that Node is good.

Hold up, this does not prove that Node is good.

Of course, in a practical setting, threads have a greater memory overhead, and programming an event loop with multiple workers just seems silly, as if you're doing that much CPU work in an event looped system, you've already fucked up somewhere, so why add to it?

Node.js Is Still Cancer

So, let's review.

Suppose you're a less-than-expert programmer, which Node seems to attract in droves for some reason. You are using Node for the supposed "scalability" of it, but as we have just seen, threaded programming, which is easier to understand than callback driven programming, meets or exceeds the asynchronous model in the vast majority of cases. Chances are, you're not going to be forking worker processes to do CPU jobs, what with the less-than-expert and all.

Therefore, the reason you're using Node is not a lack of technical ability, it's because all the cool kids are doing it.

Node.js is a danger to novice programmers.

Node.js is a danger to novice programmers.

Next, suppose you're an expert programmer, and you've got some CPU bound work that you fork off to child processes to keep your event loop trucking. OK man, how complicated do you want to make this thing? At full capacity, you're at par with threads, provided it's not memory bound. At this point, you are less focused on solving the problem at hand than you are on coming up with something you can blog about and get on programming Reddit.

If you're forking workers in Node, you've got bigger problems.

If you're forking workers in Node, you've got bigger problems.

Plus, it's fucking JavaScript ... on the server.